A Harm Assessment Risk Tool used by the police to cut reoffending rates is at the heart of an increasingly heated debate surrounding the role of algorithms in the criminal justice system.

Durham Constabulary had a problem. In an innovative effort to cut the number of people making one-way trips into the justice mill because of minor offences, it introduced a procedure called Checkpoint. This essentially offers certain categories of people, especially women, who are accused of certain kinds of offences the option of an out-of-court disposal if they sign up for offender management. It seems to work: fewer than 5% of individuals admitted to Checkpoint reoffend during the programme.

The key is to pick the right candidates: there is no point offering the programme to people who are not going to reoffend anyway. At the other end of the scale, offering a second chance to someone who goes on to rape or murder would be a disaster. Those responsible for making the initial selection – police custody officers – tend to err on the safe side, so Durham commissioned a computerised tool to help them assess risk. The result is the Harm Assessment Risk Tool (HART), an algorithmic system initially trained on the outcomes of 104,000 historic ‘custody events’.

HART is now at the centre of an escalating debate about whether it is appropriate for life-changing decisions about people to be taken by algorithmic processes trained with statistical data. HART’s progenitor, Michael Barton, who retires this week as Durham’s chief constable, made a forthright contribution to the debate. He was one of 80 contributors to a year-long inquiry by a commission set up by Law Society president Christina Blacklaws to examine the use of algorithms in the criminal justice system. The commission reported its findings last week.

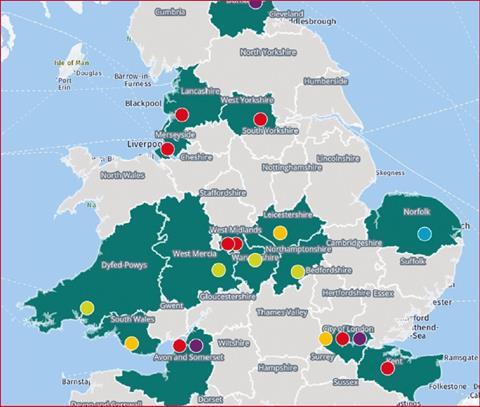

The report was widely covered as the latest in a series of calls for controls, or even outright bans, on algorithm-based decision-making. In fact it is more nuanced than that. The commission concludes that algorithm-based systems could help make criminal justice more efficient and effective – so long as they are designed, procured and operated in a transparent and lawful manner. At the moment, with ad hoc developments and deployments taking place around England and Wales (see map), there is a risk that they are not.

Blacklaws warned of ‘a worrying lack of oversight or framework to mitigate some hefty risks – of unlawful deployment, of discrimination or bias that may be unwittingly built in’.

The commission makes seven sets of recommendations to put things right. These cover:

- Oversight: The lawful basis for the use of any algorithmic systems must be clear and explicitly declared;

- Transparency: The government should create a national register of algorithmic systems used by public bodies;

- Equality: The public sector equality duty must be applied to the use of algorithms in the justice system;

- Human rights: Public bodies must be able to explain what human rights are affected by any complex algorithm they use;

- Human judgement: There must always be human management of complex algorithmic systems;

- Accountability: Public bodies must be able to explain how specific algorithms reach specific decisions; and

- Ownership: Public bodies should own software rather than renting it from suppliers and should manage all political design decisions.

It concludes: ‘The UK has a window of opportunity to become a beacon for a justice system trusted to use technology well, with a social licence to operate and in line with the values and human rights underpinning criminal justice.’

However, a launch conference last week heard how, despite the good intentions of its creators, HART failed this test, at least initially. The issue is not so much the algorithm as the data it is fed to come up with predictions.

Another witness to the commission, Silkie Carlo of lobby group Big Brother Watch, recounted concerns raised by her organisation over the inclusion of data from a ‘consumer classification’ system called Experian Mosaic, which classifies every household in the UK according to 66 detailed types. Carlo alleged that categories such as ‘Asian heritage’ are ‘really quite offensive and crude’. Experian denies this, saying that it relies on more than 450 data variables from a combination of proprietary, public and trusted third party sources. Durham says it has now stopped including Mosaic in its dataset.

The fear is that, as police forces and other arms of the justice system make more use of algorithmic systems to manage resources, identify offenders and predict the outcome of cases, flawed datasets will embed human prejudices in the system. However, as technology entrepreneur Noel Hurly told last week’s event, the issue is not algorithms per se, but the data they rely on: ‘There needs to be a discussion about how to teach machines to forget.’
